The science-fiction future is upon us as we find ourselves on the precipice of an AI revolution. As with all technological milestones, from the wheel to the computer, what we can do and how fast we can do it shift towards ever-increasing productivity. While the philosophers and keyboard warriors among us debate the broader ramifications of AI, here at Big Nerd Ranch, we concern ourselves with more practical matters.

In this series, I plan on investigating ChatGPT’s answer to one of the oldest questions in human history: How can I make my life easier?

Recognizing that a robust suite of unit tests is essential for ensuring a healthy codebase isn’t rocket surgery. But let’s face it: writing tests can be tedious at times. If you’re anything like me, you’ve probably caught yourself daydreaming about having a trusty assistant to either take care of it all for you or, at the very least, lend a helping hand. We’re going to put ChatGPT through some tests today, starting with something easy to calibrate ourselves and moving on to testing a React reducer and a React component.

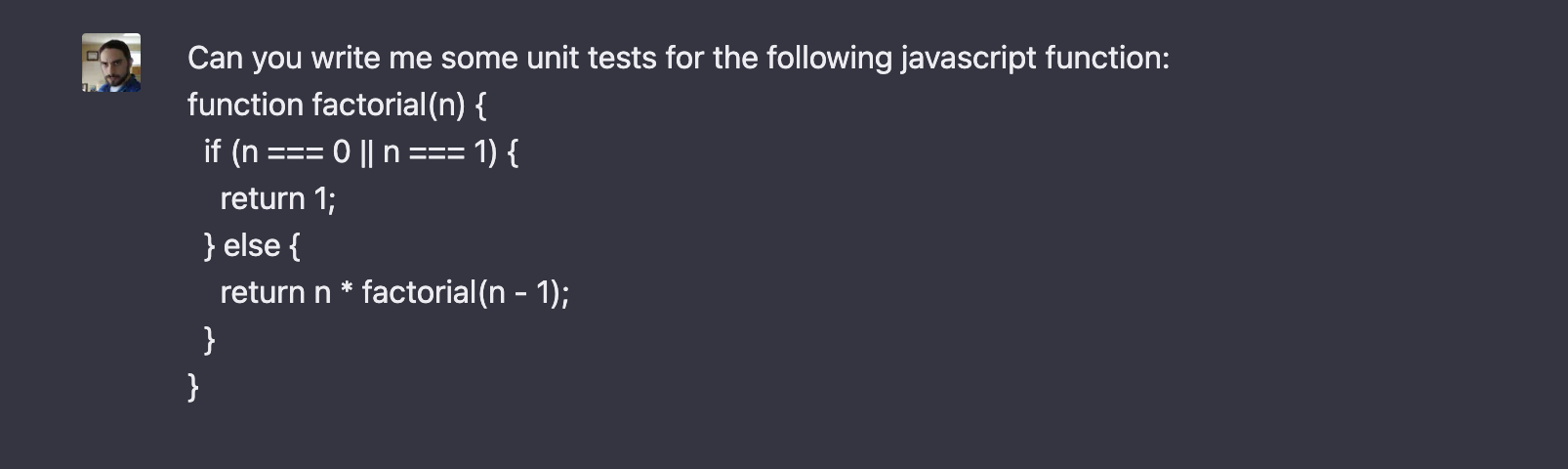

Let’s walk before we run, shall we? We’ll ask it to write some unit tests for a recursive function to see if it gets tripped up. The simplest recursive function I can think of is the factorial, something we should have all seen in CS101:

    function factorial(n) {
      if (n === 0 || n === 1) {
        return 1;
      } else {
        return n * factorial(n - 1);
      }
    }

Simple enough, right? Now we’ll ask for some unit tests:

Prompt: Can you write me some unit tests for the following javascript function:

    test("factorial of 0 is 1", () => {
      expect(factorial(0)).toBe(1)
    })

    test("factorial of 1 is 1", () => {
      expect(factorial(1)).toBe(1)
    })

    test("factorial of 5 is 120", () => {
      expect(factorial(5)).toBe(120)
    })

    test("factorial of negative number throws an error", () => {
      expect(() => factorial(-1)).toThrow()
    })

Impressive. ChatGPT not only accounted for the special case of 0 factorial but also considered the possibility of negative input.
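One caveat: the original `factorial` has no guard for negative input, so `factorial(-1)` throws only because the recursion never reaches a base case and eventually overflows the call stack. The `toThrow()` test passes, but arguably for the wrong reason. A minimal sketch of a version that rejects bad input explicitly might look like the following; the name `safeFactorial` and the choice of `RangeError` are assumptions made for illustration, not part of the original code.

```javascript
// Hypothetical variant of factorial with explicit input validation.
function safeFactorial(n) {
  // Reject anything that is not a non-negative integer up front,
  // instead of relying on a stack overflow to signal the problem.
  if (!Number.isInteger(n) || n < 0) {
    throw new RangeError(`factorial is undefined for ${n}`);
  }
  // Base cases 0 and 1 both return 1; otherwise recurse.
  return n <= 1 ? 1 : n * safeFactorial(n - 1);
}
```

With a guard like this, the negative-number test fails fast with a descriptive message, and a test for non-integer input such as `1.5` becomes meaningful as well.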

That gave us a decent idea of how it handles writing tests for a simple recursive function. Now that we’re calibrated, let’s switch gears to a more practical example.

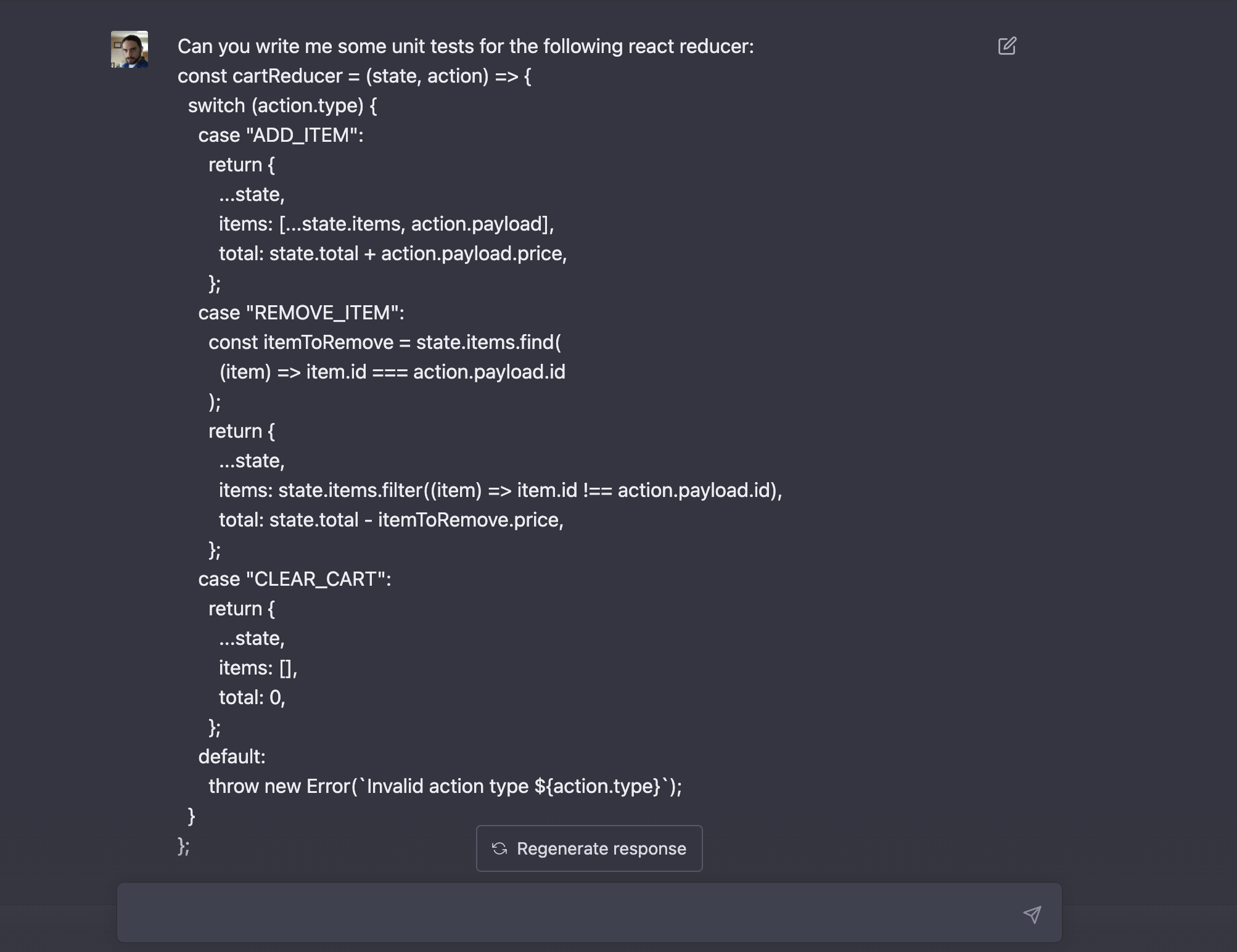

The following code snippet is a React reducer for an online shopping cart:

    const cartReducer = (state, action) => {
      switch (action.type) {
        case "ADD_ITEM":
          return {
            ...state,
            items: [...state.items, action.payload],
            total: state.total + action.payload.price,
          };
        case "REMOVE_ITEM":
          const itemToRemove = state.items.find(
            (item) => item.id === action.payload.id
          );
          return {
            ...state,
            items: state.items.filter((item) => item.id !== action.payload.id),
            total: state.total - itemToRemove.price,
          };
        case "CLEAR_CART":
          return {
            ...state,
            items: [],
            total: 0,
          };
        default:
          throw new Error(`Invalid action type ${action.type}`);
      }
    };

We can identify a few tests that need to be written by skimming through it. At a minimum, we’d want tests for adding items to the cart, removing them, and emptying the cart. Before we start writing anything ourselves, let’s turn to our trusty assistant, ChatGPT, and see if it agrees with us.

Prompt: Can you write me some unit tests for the following react reducer:

This is what ChatGPT generated:

    describe("cartReducer", () => {
      let initialState;

      beforeEach(() => {
        initialState = { items: [], total: 0 };
      });

      it("should handle ADD_ITEM action", () => {
        const action = { type: "ADD_ITEM", payload: { id: 1, name: "Item 1", price: 10 } };
        const newState = cartReducer(initialState, action);
        expect(newState.items.length).toEqual(1);
        expect(newState.items[0]).toEqual(action.payload);
        expect(newState.total).toEqual(action.payload.price);
      });

      it("should handle REMOVE_ITEM action", () => {
        const itemToRemove = { id: 1, name: "Item 1", price: 10 };
        const action = { type: "REMOVE_ITEM", payload: itemToRemove };
        const state = { items: [itemToRemove], total: itemToRemove.price };
        const newState = cartReducer(state, action);
        expect(newState.items.length).toEqual(0);
        expect(newState.total).toEqual(0);
      });

      it("should handle CLEAR_CART action", () => {
        const state = { items: [{ id: 1, name: "Item 1", price: 10 }], total: 10 };
        const action = { type: "CLEAR_CART" };
        const newState = cartReducer(state, action);
        expect(newState.items.length).toEqual(0);
        expect(newState.total).toEqual(0);
      });

      it("should throw an error for invalid action types", () => {
        const action = { type: "INVALID_ACTION" };
        expect(() => cartReducer(initialState, action)).toThrowError(
          "Invalid action type INVALID_ACTION"
        );
      });

      it("should throw an error for invalid action types", () => {
        expect(() => cartReducer(initialState)).toThrowError(
          "Invalid action type INVALID_ACTION"
        );
      });
    });

Not only did it whip up some tests for adding, removing, and emptying the cart, but it also included tests for handling empty and invalid actions. Now all that’s left is to run these tests. However, keep in mind that since ChatGPT doesn’t have any knowledge of our specific file structure, we might need to make some minor adjustments, such as fixing import paths, to get everything running smoothly. A small price to pay, I say.

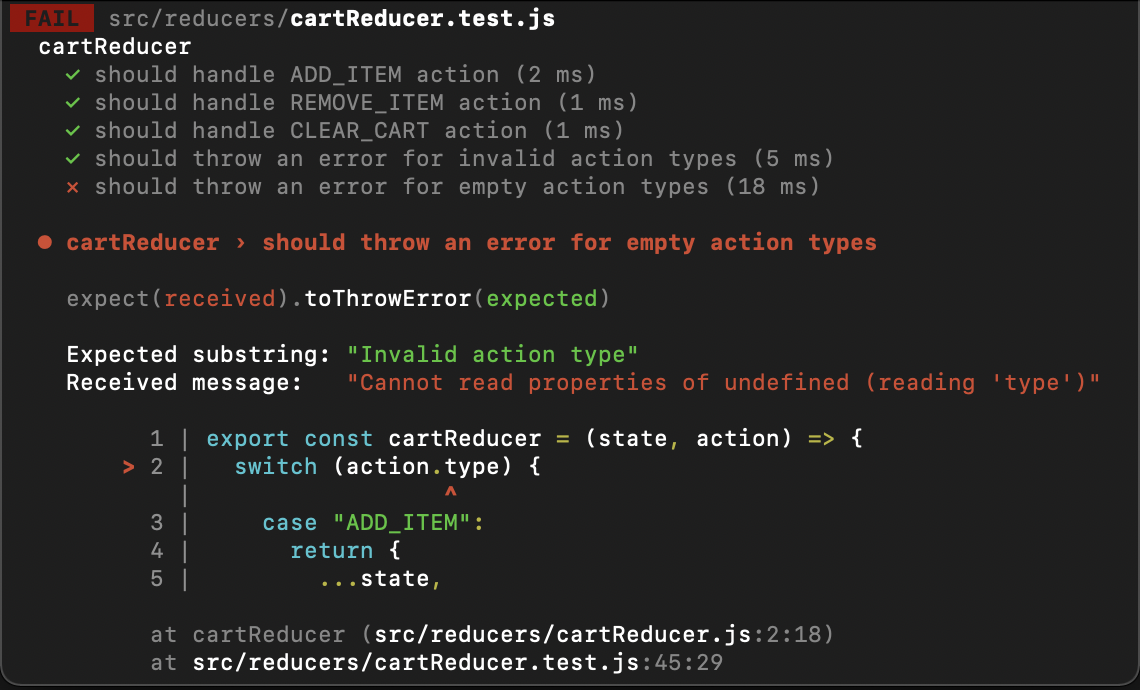

Let’s run these tests.

Looks like everything passed except for one test. Looking through the cartReducer code, we can see why the test failed: the code we originally wrote isn’t built to handle a missing action, so the switch statement throws a TypeError as soon as it tries to read action.type on undefined, before the reducer ever reaches the line that throws the invalid action error. The test expected the invalid action message and got a different error instead. While it can be considered a mistake on ChatGPT’s part to create that unit test, it did alert us to a potential unhandled exception in our cartReducer code.
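One way to close that gap is to validate the action before the `switch` ever reads `action.type`. The sketch below wraps any reducer in a guard. `withActionGuard` is a hypothetical helper introduced here for illustration, `countReducer` is a stand-in so the snippet runs on its own, and the error message simply mirrors the one in `cartReducer`.

```javascript
// Hypothetical helper: wraps a reducer so that a missing or malformed
// action produces a descriptive Error instead of a TypeError.
const withActionGuard = (reducer) => (state, action) => {
  // `action?.type` evaluates to undefined when action is null/undefined
  // or when it has no `type` property.
  if (typeof action?.type !== "string") {
    throw new Error(`Invalid action type ${action?.type}`);
  }
  return reducer(state, action);
};

// Stand-in reducer for the example (swap in cartReducer in practice).
const countReducer = (state, action) => {
  switch (action.type) {
    case "INCREMENT":
      return { ...state, count: state.count + 1 };
    default:
      throw new Error(`Invalid action type ${action.type}`);
  }
};

const guardedReducer = withActionGuard(countReducer);
```

With the guard in place, calling `guardedReducer({ count: 0 })` with no action throws `Invalid action type undefined`, so ChatGPT’s final test would still need its expected message changed from `INVALID_ACTION` to `undefined`.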

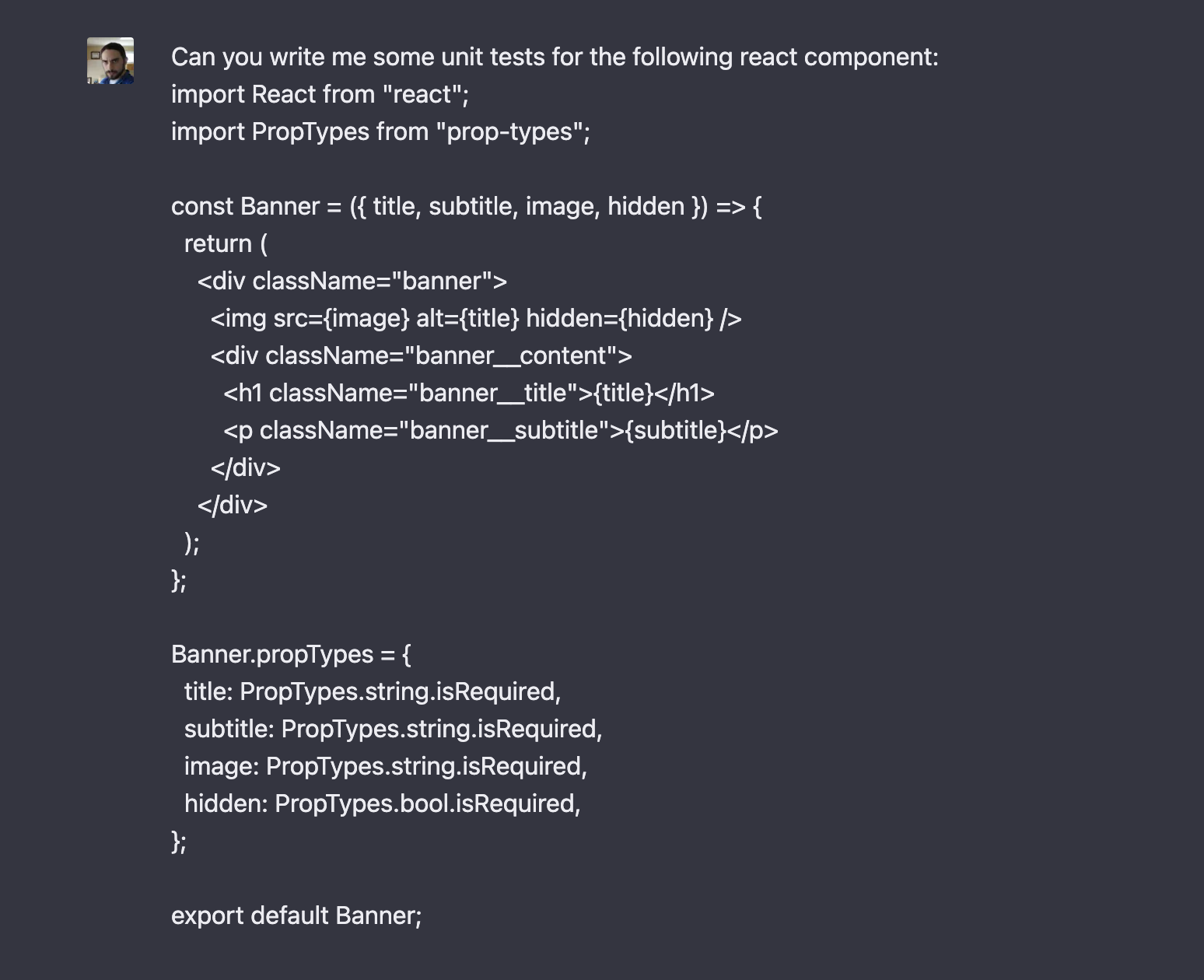

Let’s feed it a React component and see how it does. Here we have a Banner React component that validates its props with PropTypes.

    import React from "react";
    import PropTypes from "prop-types";

    const Banner = ({ title, subtitle, image, hidden }) => {
      return (
        <div className="banner">
          <img src={image} alt={title} hidden={hidden} />
          <div className="banner__content">
            <h1 className="banner__title">{title}</h1>
            <p className="banner__subtitle">{subtitle}</p>
          </div>
        </div>
      );
    };

    Banner.propTypes = {
      title: PropTypes.string.isRequired,
      subtitle: PropTypes.string.isRequired,
      image: PropTypes.string.isRequired,
      hidden: PropTypes.bool.isRequired,
    };

    export default Banner;

Let’s see how ChatGPT handles it.

Prompt: Can you write me some unit tests for the following React component:

    import React from "react";
    import { render, screen } from "@testing-library/react";
    import Banner from "./Banner";

    describe("Banner component", () => {
      it("renders the title", () => {
        render(
          <Banner
            title="Test Title"
            subtitle="Test Subtitle"
            image="test.png"
            hidden={false}
          />
        );
        const titleElement = screen.getByText("Test Title");
        expect(titleElement).toBeInTheDocument();
      });

      it("renders the subtitle", () => {
        render(
          <Banner
            title="Test Title"
            subtitle="Test Subtitle"
            image="test.png"
            hidden={false}
          />
        );
        const subtitleElement = screen.getByText("Test Subtitle");
        expect(subtitleElement).toBeInTheDocument();
      });

      it("renders the image with the correct alt text", () => {
        render(
          <Banner
            title="Test Title"
            subtitle="Test Subtitle"
            image="test.png"
            hidden={false}
          />
        );
        const imageElement = screen.getByAltText("Test Title");
        expect(imageElement).toBeInTheDocument();
        expect(imageElement).toHaveAttribute("src", "test.png");
      });

      it("hides the image when hidden prop is true", () => {
        render(
          <Banner
            title="Test Title"
            subtitle="Test Subtitle"
            image="test.png"
            hidden={true}
          />
        );
        const imageElement = screen.getByAltText("Test Title");
        expect(imageElement).not.toBeInTheDocument();
      });
    });

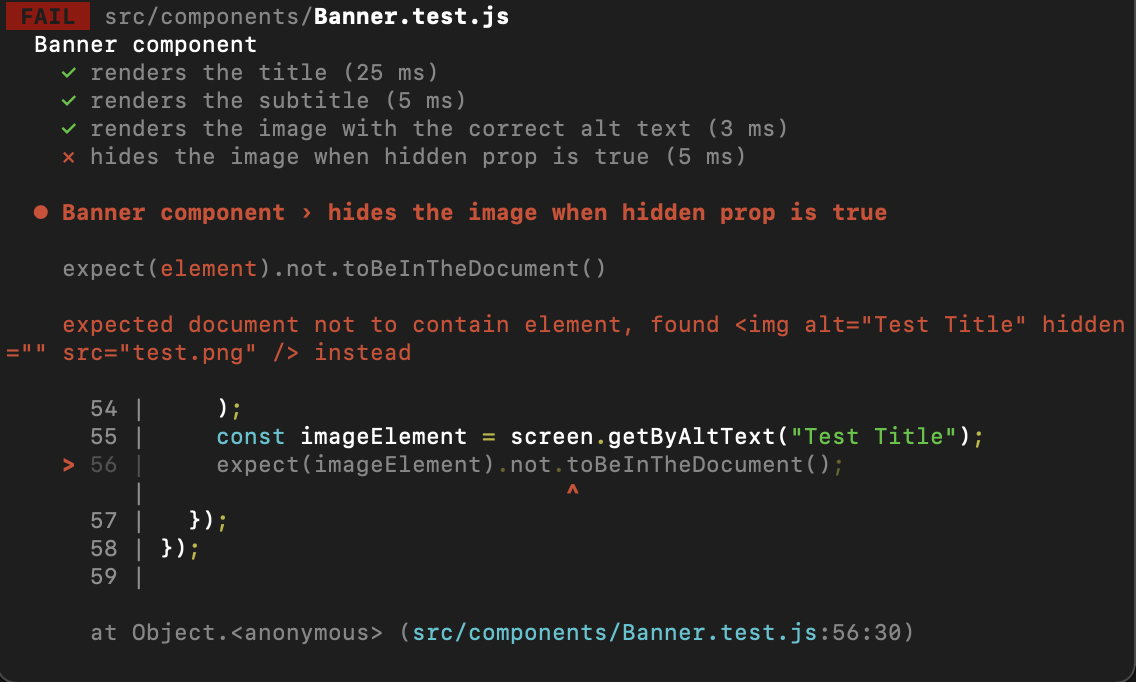

We’ll go ahead and run these tests.

A single test failed. ChatGPT assumed that toBeInTheDocument would fail for an element that is hidden. In fact, toBeInTheDocument only checks whether the element is present in the DOM tree, regardless of visibility, so the hidden image is still found and the negated assertion fails. ChatGPT should have used toBeVisible instead. Let’s go ahead and make that change and see if we can get that test to pass.

    import React from "react";
    import { render, screen } from "@testing-library/react";
    import Banner from "./Banner";

    describe("Banner component", () => {
      it("renders the title", () => {
        render(
          <Banner
            title="Test Title"
            subtitle="Test Subtitle"
            image="test.png"
            hidden={false}
          />
        );
        const titleElement = screen.getByText("Test Title");
        expect(titleElement).toBeInTheDocument();
      });

      it("renders the subtitle", () => {
        render(
          <Banner
            title="Test Title"
            subtitle="Test Subtitle"
            image="test.png"
            hidden={false}
          />
        );
        const subtitleElement = screen.getByText("Test Subtitle");
        expect(subtitleElement).toBeInTheDocument();
      });

      it("renders the image with the correct alt text", () => {
        render(
          <Banner
            title="Test Title"
            subtitle="Test Subtitle"
            image="test.png"
            hidden={false}
          />
        );
        const imageElement = screen.getByAltText("Test Title");
        expect(imageElement).toBeInTheDocument();
        expect(imageElement).toHaveAttribute("src", "test.png");
      });

      it("hides the image when hidden prop is true", () => {
        render(
          <Banner
            title="Test Title"
            subtitle="Test Subtitle"
            image="test.png"
            hidden={true}
          />
        );
        const imageElement = screen.getByAltText("Test Title");
        expect(imageElement).not.toBeVisible();
      });
    });

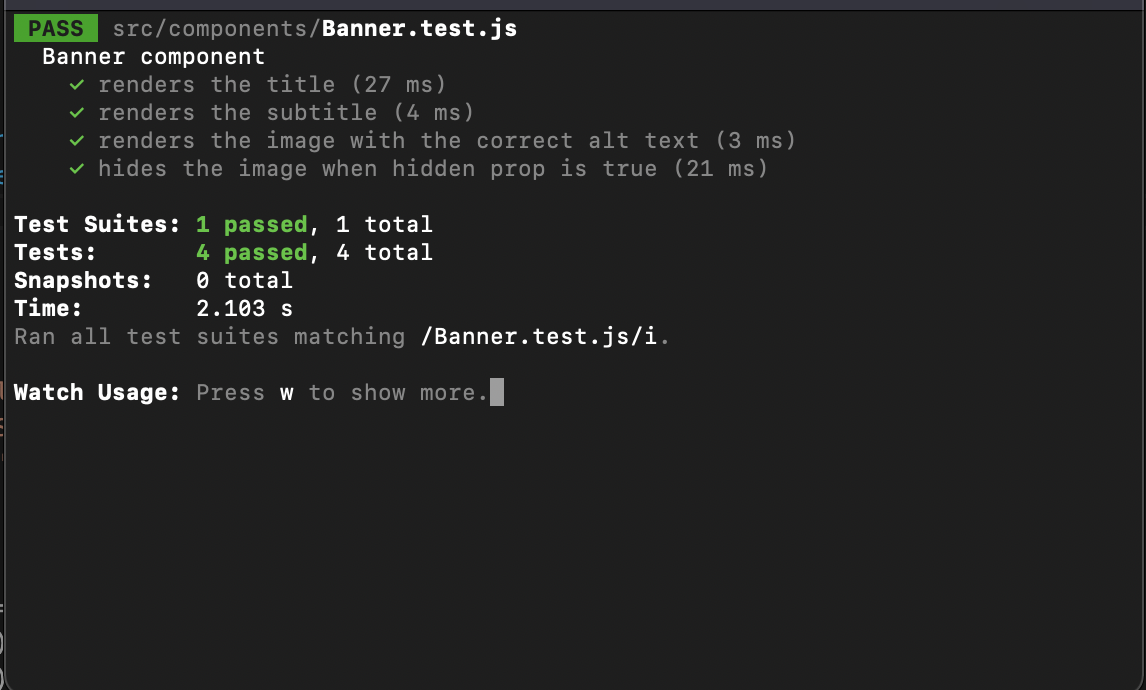

There we have it, the tests are all passing!
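To make the difference between the two matchers concrete, here is a toy model built from plain objects. It is not how jest-dom is implemented, and the real toBeVisible also considers CSS such as display, visibility, and opacity. The node shapes and the helper names `isInDocument` and `isVisible` are assumptions made purely for illustration.

```javascript
// Toy model of a DOM tree using plain objects (illustration only).
const documentRoot = { tag: "body", hidden: false, parent: null };
const banner = { tag: "div", hidden: false, parent: documentRoot };
const img = { tag: "img", hidden: true, parent: banner };

// "In the document": can we walk up from the node to the root?
const isInDocument = (node, root) => {
  for (let current = node; current; current = current.parent) {
    if (current === root) return true;
  }
  return false;
};

// "Visible" (simplified): in the document AND neither the node
// nor any of its ancestors carries the hidden flag.
const isVisible = (node, root) => {
  if (!isInDocument(node, root)) return false;
  for (let current = node; current; current = current.parent) {
    if (current.hidden) return false;
  }
  return true;
};

console.log(isInDocument(img, documentRoot)); // true
console.log(isVisible(img, documentRoot)); // false
```

The hidden image answers "yes" to the first question and "no" to the second, which is exactly why the original assertion failed and the corrected one passes.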

As with all AI-powered chatbots, certain limitations exist.

Let’s identify a few of those weaknesses so we can sleep more soundly at night:

- Lack of Creativity: Chatbots may be capable of mimicking human-like conversation, but they lack true creativity. They are machines and cannot produce truly original output or come up with novel design patterns or hyper-efficient algorithms.

- Reliance on Training Data: The accuracy of AI is heavily dependent on the quality of its training data. If the data is out-of-date or inaccurate, it will severely affect the performance of the AI and, subsequently, its output.

- Need for Human Correction: Although a clever prompt engineer may be able to coax decent output from the AI, this article demonstrated some of the errors that ChatGPT can make. This presents an interesting trade-off, as I imagine that there is a relationship between the complexity of our code and the type of mistakes ChatGPT will make. This will require a keen eye. It was fortunate that the mistakes ChatGPT made in this exercise were easy to spot.

While ChatGPT may not be ready to replace humans just yet, it’s clear that AI has the potential to revolutionize the way we live and work. As we continue to develop and use these tools, we can expect AI to become even more intelligent and capable. This presents an opportunity for developers to focus on the more challenging aspects of coding while leaving the repetitive tasks to our AI assistants.