Photo-Illustration: Intelligencer; Photo: WildChat/The Allen Institute for AI

A recent research paper sounded an alarm for AI developers: Training data is drying up. Concerns about theft, copyright, and commercial competition are leading public and semi-public resources to tighten protections against AI scraping. The result, the paper’s authors argue, “will impact not only commercial AI, but also non-commercial AI and academic research” by “biasing the diversity, freshness, and scaling laws for general-purpose AI systems.” It’s an interesting problem that’s already starting to bite. It was also probably inevitable. A bunch of companies are raising and spending money to steal data, and the people and companies they’re stealing from are not pleased.

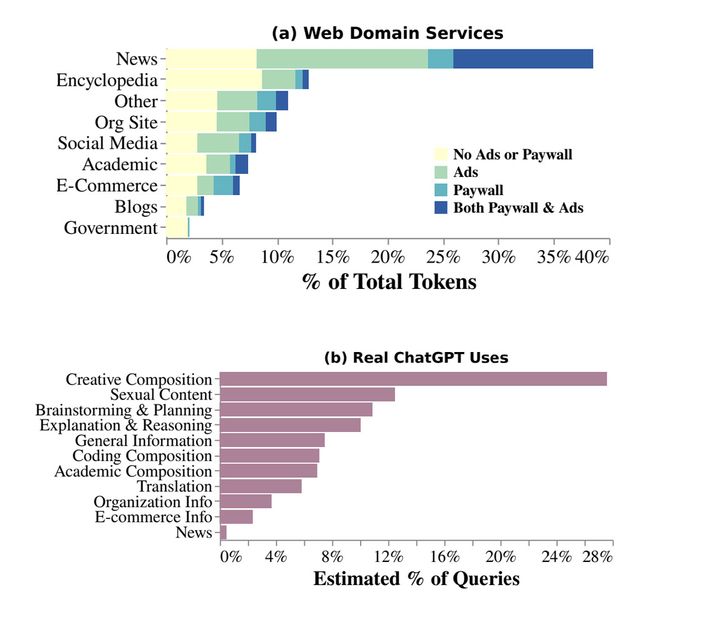

Deeper in the report, researchers for the Data Provenance Institute identified another problem: Not only is the data drying up, but what remains available is out of step with what AI companies need most, at least according to their users. They included this set of charts:

Photo-Illustration: Intelligencer; Photo: The Data Provenance Institute

AI companies are training on a ton of news and encyclopedia content, in large part because that’s what’s available to scrapers in great quantities. (In the top chart, “tokens” can be understood as units of training data in sampled sources.) Meanwhile, actual ChatGPT users are barely engaging with news at all. In reality, they’re asking ChatGPT to write stories, often of a sexual nature. They’re asking it for ideas, for assistance with research and code, and for help with homework. But, again, they’re very horny. This is, as the paper notes, an issue for model training, accuracy, and bias: People aren’t using these things in ways that match the data on which they’re trained, and AI model performance is very much determined by the quality and quantity of training data. It’s also out of step with a lot of the discourse around AI, in which concerns about the news, disinformation, and the media in general have played — for reasons both novel and obvious — an outsize role. ChatGPT users are asking a newsbot to write erotic fiction. Not ideal!

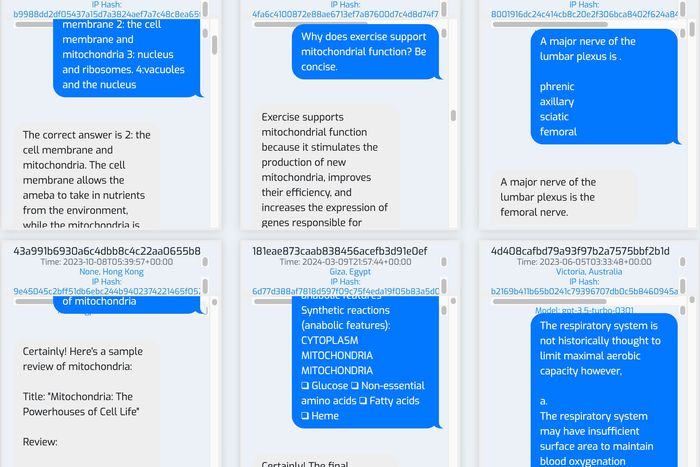

But wait — since when do we know how people are actually using ChatGPT? OpenAI doesn’t share data like this, which would be extremely valuable to people trying to figure out what’s going on with the company and with AI in general. On this, the Data Provenance Institute cites WildChat, a project from the Allen Institute for AI, a nonprofit funded by Microsoft co-founder Paul Allen. Researchers “offered free access to ChatGPT for online users in exchange for their affirmative, consensual opt-in to anonymously collect their chat transcripts,” resulting in a data set of “1 million user-ChatGPT conversations.” These conversations aren’t perfectly representative of ChatGPT use — researchers warn that because of where it was provided, and the fact that it allowed for anonymous use, it probably overselected for tech-inclined users and people who “prefer to engage in discourse they would avoid on platforms that require registration.” In any case, these conversations are searchable, and they’re some of the most illuminating things I’ve ever seen on the question of what people actually expect from their chatbots.

To get this one out of the way, the horniness is relentless — search any explicit term and you’ll get hundreds of conversations in which persistent users are trying (and usually failing) to get ChatGPT to write erotic stories about video-game characters, celebrities, or themselves. There’s a huge amount of “explanation” that’s very clearly just help with schoolwork — a fascinating Washington Post analysis of the data found that about one in six conversations was basically about homework:

Photo-Illustration: Intelligencer; Photo: WildChat/Allen Institute forAI

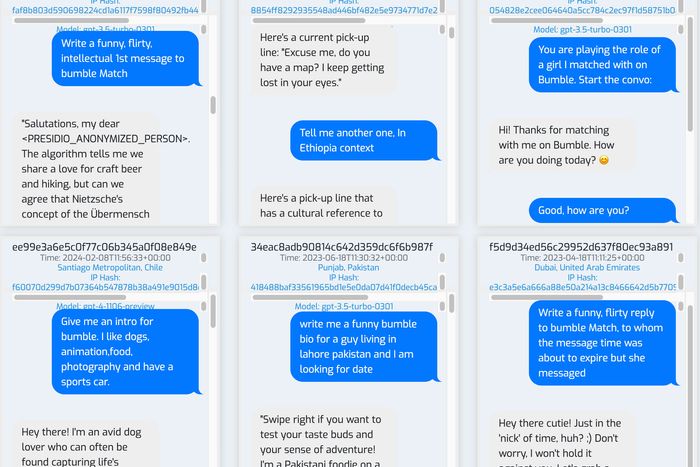

There’s also a great deal of assistance with interpersonal issues and communication: help writing messages for work and school, but also dating apps:

Photo-Illustration: Intelligencer; Photo: WildChat/Allen Institute forAI

Again, if you’re not sure what people are getting from services like ChatGPT, and trying them yourself hasn’t helped, poke around here for a while. It’s probably not ideal for OpenAI (and others) that users spend so much time trying to coax chatbots into doing things they’re not supposed to, or into helping them do things that they’re not supposed to, but the broader sense you get from these interactions is that, generally, a lot of ChatGPT users expect the chatbot to be capable of a really wide range of things — they treat it like a more comprehensive resource than it probably is and more like a person — which indicates belief, trust, and plausible demand.

Glimpses into real user habits for new technologies are pretty rare — the last time I remember being able to eavesdrop such strange, rich, and occasionally bracing material like this was when AOL released a massive cache of search logs back in 2006, revealing that its users were talking to the search engine, revealing incredibly poignant and sometimes dark secrets in the course of something like — but also clearly unlike — conversation. (It doesn’t take long to find similarly moving material in the anonymized WildChat records; similarly, while the data has been cleaned somewhat, it’s easy to find intensely distressing sexual and violent requests.)

The main takeaway then was that people were ready to place a great deal of trust in open text boxes, and that “search,” for a lot of users, was something more like an all-purpose companion, a box into which they could put anything and frequently get at least something back — in the broadest possible sense, a bullish outlook for then-rising companies like Google. The new text boxes actually pretend to have conversations with you, and users are responding with similarly extreme candor. They’re behind on their work. And they’d like to read some porn.

Source link